Take the perspective of a few years ago, before Google invested in developing ASICs. In this particular case, however, I think the differential technological advancement model is wrong in an interesting way. I have mixed feelings about differential technological development as applied to AGI while the safety/control research has a long way to go, humanity faces a lot of serious problems which AGI could solve. Under a model of differential technological development, the creation of TPUs could be bad if it accelerates progress in AI capability more than it accelerates progress in AI safety.

#FP32 VS FP64 HOW TO#

Some people model AGI development as a race between capability and control, and want us to know more about how to control AGIs before they’re created. If your timeline estimate was based on extrapolating Moore’s Law or the rate of progress excluding the past year, then this should make you expect AGI to arrive sooner.

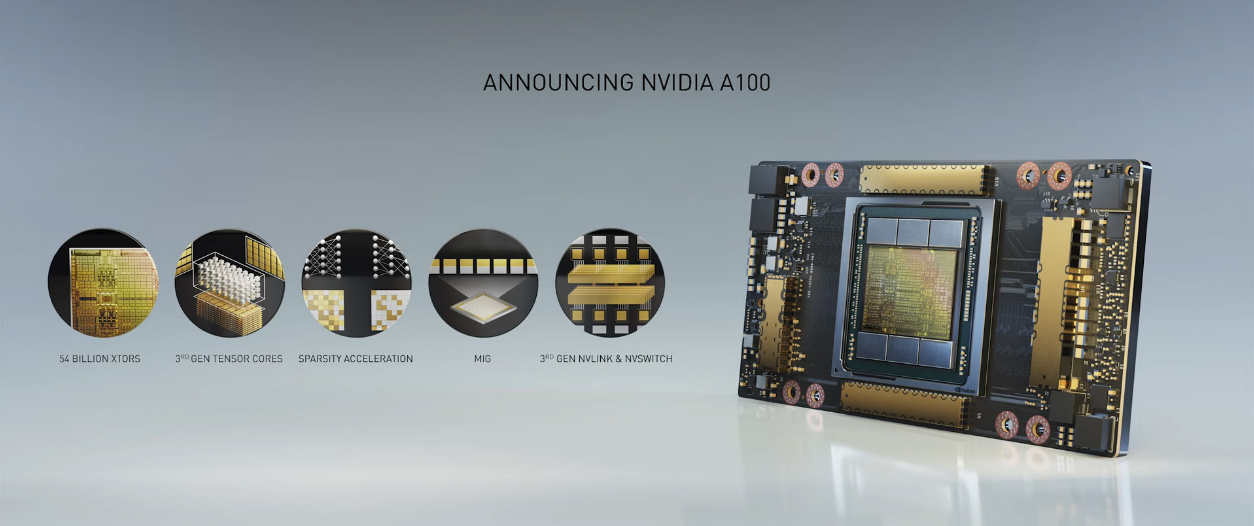

If your estimate of when there will be human-comparable or superintelligent AGI was based on the very high rate of progress in the past year, then this should make you expect AGI to arrive later, because it explains some of that progress with a one-time gain that can’t be repeated. This is fairly straightforward researchers can do more experiments with more computing power, and algorithms that stretched past the limits of available computing power before became possible. The development of TPUs accelerated the timeline of AGI development. So, what does this mean for AGI timelines, and how does the existence of TPUs affect the outcome when AGI does come into existence? TPU is tailored to machine learning applications, allowing the chip to be more tolerant of reduced computational precision, which means it requires fewer transistors per operation. We’ve been running TPUs inside our data centers for more than a year, and have found them to deliver an order of magnitude better-optimized performance per watt for machine learning. Last Wednesday, Google announced that AlphaGo was not powered by GPUs as everyone thought, but by Google’s own custom ASICs (application-specific integrated circuits), which they are calling “tensor processing units” (TPUs). The L2 cache is another mystery, but considering that the GA100 already had over 40MB, it’s probable that we’ll see 96MB on the GH100, the same as the AD102.The following article was written by Jim Babcock and originally posted on Concept Space Cartography. HBM 3 allows for 24GB stacks across a 1,024-bit bus which means NVIDIA can use up to 16-24GB stacks acoss six 1,204-bit memory controllers. The memory pool will also grow quite significantly with Hopper, increasing from “just” 40GB HBM2 on the GA100 to at least 128GB of HBM3 on the GH100. If NVIDIA wants to overcome AMD’s recently announced Instinct MI200 family, then these figures are kind of necessary. 
Running the numbers, you get a total of 288 SMs, 144 TPCs, 16 GPCs, resulting in an overall core count of 18,432 and 9,216 for the FP32 and FP64 ALUs, respectively. If Hopper will actually leverage a chiplet approach, then we’re probably looking at just one of the two dies making up the entire SKU. This increases the overall ALU count by 30-35%, bringing the FP32 figure to 9,216, and FP64 to 4,608.

#FP32 VS FP64 UPGRADE#

The key upgrade over Ampere is the one additional TPC per SM, bringing up the total to 72 (each GPC packing 9 TPCs).

The GH100 full-fat die will pack a total of 144 SMs (Streaming Multiprocessors) across 8 GPCs (Graphics Processing Clusters), and 72 TPCs (Texture Processing Clusters). Based on a chiplet design, the GH100 will accelerate inferencing and other machine learning workloads, while also pushing record big data and HPC performance. NVIDIA plans to launch its next-gen Hopper data center GPUs later this year, with a possible announcement at GTC 2022 in late March.
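The SM, ALU and memory figures above come from simple multiplication. The short Python sketch reproduces them under a few stated assumptions:

- **SM and ALU ratios:** 2 SMs per TPC, and 64 FP32 plus 32 FP64 ALUs per SM. These are inferred from the article's own numbers (144 / 72, 9,216 / 144, 4,608 / 144), not taken from an NVIDIA specification.
- **Ampere baselines:** 128 SMs for the full GA100 die and 108 enabled SMs for the shipping A100.
- **Helper names:** `gpu_counts` and `hbm_config` are hypothetical helpers for illustration only.

```python
# Back-of-the-envelope check of the rumored GH100 figures.
# Assumption: 2 SMs per TPC, 64 FP32 and 32 FP64 ALUs per SM. These ratios are
# inferred from the article's numbers, not from an official NVIDIA spec.
FP32_PER_SM = 64
FP64_PER_SM = 32
SMS_PER_TPC = 2


def gpu_counts(gpcs: int, tpcs_per_gpc: int, dies: int = 1) -> dict:
    """Roll GPC/TPC counts up to SM and ALU totals for one or more dies."""
    total_gpcs = gpcs * dies
    tpcs = total_gpcs * tpcs_per_gpc
    sms = tpcs * SMS_PER_TPC
    return {
        "gpcs": total_gpcs,
        "tpcs": tpcs,
        "sms": sms,
        "fp32": sms * FP32_PER_SM,
        "fp64": sms * FP64_PER_SM,
    }


def hbm_config(stacks: int, gb_per_stack: int, bits_per_stack: int = 1024) -> dict:
    """Total capacity (GB) and combined bus width (bits) for `stacks` HBM stacks."""
    return {"capacity_gb": stacks * gb_per_stack, "bus_bits": stacks * bits_per_stack}


if __name__ == "__main__":
    one_die = gpu_counts(gpcs=8, tpcs_per_gpc=9)
    two_dies = gpu_counts(gpcs=8, tpcs_per_gpc=9, dies=2)
    print("one die:", one_die)
    print("two dies:", two_dies)

    # FP32 uplift against two assumed Ampere baselines:
    # the full GA100 die (128 SMs) and the shipping A100 (108 enabled SMs).
    for name, ampere_sms in (("full GA100", 128), ("A100", 108)):
        uplift = one_die["fp32"] / (ampere_sms * FP32_PER_SM) - 1
        print(f"FP32 uplift vs {name}: {uplift:.1%}")

    # Six 1,024-bit memory controllers populated with 16 GB or 24 GB HBM3 stacks.
    for gb in (16, 24):
        print(f"6 x {gb} GB stacks:", hbm_config(stacks=6, gb_per_stack=gb))
```

**What the script reports**

- **One die:** 144 SMs, 9,216 FP32 and 4,608 FP64 ALUs.
- **Two dies:** 288 SMs, 18,432 FP32 and 9,216 FP64 ALUs.
- **FP32 uplift:** 12.5% against the full 128-SM GA100, and about 33.3% against the 108-SM A100. This suggests the 30-35% figure is measured against the shipping A100.
- **Memory:** six stacks give a 6,144-bit bus with 96GB (16GB stacks) to 144GB (24GB stacks) of capacity.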
